Neither artificial nor particularly intelligent. That's AI according to Kate Crawford. An eclectic researcher who divides her time between the USC Annenberg School in California, the University of Sydney, and the Microsoft Research lab in New York, Crawford has devoted two decades of study to the political and social implications of big data and machine learning systems.

In her latest book, Atlas of AI, she takes apart the myths and narratives surrounding artificial intelligence and brings the discussion back to a more serious and realistic analysis of its environmental impacts and social consequences. The system she describes rests on a chain of extractivism and labor exploitation that is anything but immaterial. We spoke with her about data, power, and the often hidden costs of a technology that is already transforming the world.

Let’s start from the very beginning: how can we “build” an intelligence?

That is still very much an open question. Intelligence can mean so many different things, and the term shifts over time. As a species, humans are currently spending enormous amounts of resources building large-scale computationally intensive systems that can do predictive analytics at scale. However, this is quite a different thing from embodied, relational human intelligence. John McCarthy, who coined the term artificial intelligence in 1956, had real reservations about the name.

Herbert Simon had a very different but more accurate name: “complex information processing.” I think the field would be much better off if Herbert Simon’s name had stuck – it would avoid much of the cult-like mythologizing so that we can focus on the reality of these technologies – and their planetary costs.

Indeed, the dominant narrative describes AI as a sort of magic, but it is much more about data collection and statistics than about a real “ability”. As you wrote, “artificial intelligence is not intelligent”.

The current wave of generative AI has only increased the amount of mystification and hype around these computational approaches. The historian of science Alex Campolo and I called this “enchanted determinism” – that is, the way that AI systems are seen as magic, alien, or superhuman, yet also trusted to produce results with deterministic accuracy. These systems are just probabilistic statistics at scale.

You talk about a reductionist approach that is inherent in the way AI has been developed from data and classification. What are the risks of this reductionism?

AI systems are defined by the data used to train them and the logic of classification they are optimized to achieve. The training data shapes the foundations of what is possible: creating a worldview. This is very much in contrast to the traditional way we think about “intelligence” as unbounded, in flux, embodied, self-aware, and in relation to other entities. AI is statistical probability at scale, and that kind of engineering is about flattening complexity to produce an answer, an image, or a piece of code. The risk of this reductionism is that we will fail to see it for what it is, and in doing so, put our trust in these systems without seeing their very real downsides.

In addition to not being intelligent, AI is not even "artificial", as you wrote. What do you mean by that?

AI systems are profoundly material. But the imaginaries of AI are ethereal: visions of immaterial code, abstract mathematics, and algorithms in the cloud. In reality, AI is made of minerals, energy, and vast amounts of water. Data centers generate immense heat that contributes to climate change, and the construction and maintenance of AI depends on underpaid labor in the Global South. In this sense, as in many others, there is nothing artificial about AI.

So, AI as a system – or as a “mega-machine”, as you describe it – is deeply based on extractivism and exploitation of resources. What are the main supply chains involved?

AI supply chains start in the mines, with low-wage workers extracting rare minerals, cobalt, lithium, and other materials from the ground. These then become the hardware that AI systems rely upon. However, there are also the data supply chains – training data, inference data, testing and validation data – often extracted from the public internet or bought via data brokers. Finally, there is the labor supply chain, with low-wage clickworkers giving “human feedback” as part of reinforcement learning models, labeling datasets, and in some cases, even posing as AI chatbots.

You have exposed the myth of AI and the Cloud as a clean industry. What are the real environmental impacts of this supposedly “immaterial” sector? Do we have an overall figure for its carbon footprint?

Estimates vary, but the consensus is that AI’s carbon footprint is growing rapidly. According to a recent paper, global data center electricity consumption has grown by 20-40% annually in recent years, reaching 1-1.3% of global electricity demand. Another study indicates that the AI industry will shortly be consuming as much energy as a country the size of the Netherlands. And of course, with generative AI, the carbon footprint and water consumption are increasing even more rapidly, but it’s still too soon to have detailed figures.

You have an ongoing visual art project on technology and power with Vladan Joler, which is now on exhibit in Milan at the Fondazione Prada. Why did you choose the visual arts to communicate your research?

One of the central questions driving my work is: how can we understand the operations of technology and power in our era? Our technological systems, from AI chatbots to international border checks, are increasingly automated and opaque. Social institutions, from schools to prisons, are becoming data industries, incorporating pervasive forms of data capture and analysis. The current transformations in AI have concentrated power into ever fewer hands, accelerating polarization and alienation at a time when democracy is already in turmoil. If we are to address these urgent challenges – technocratic control, climate catastrophe, and wealth inequality – we need to see how they are interwoven. To show that is a challenge, but something that we wanted to do in a visual format.

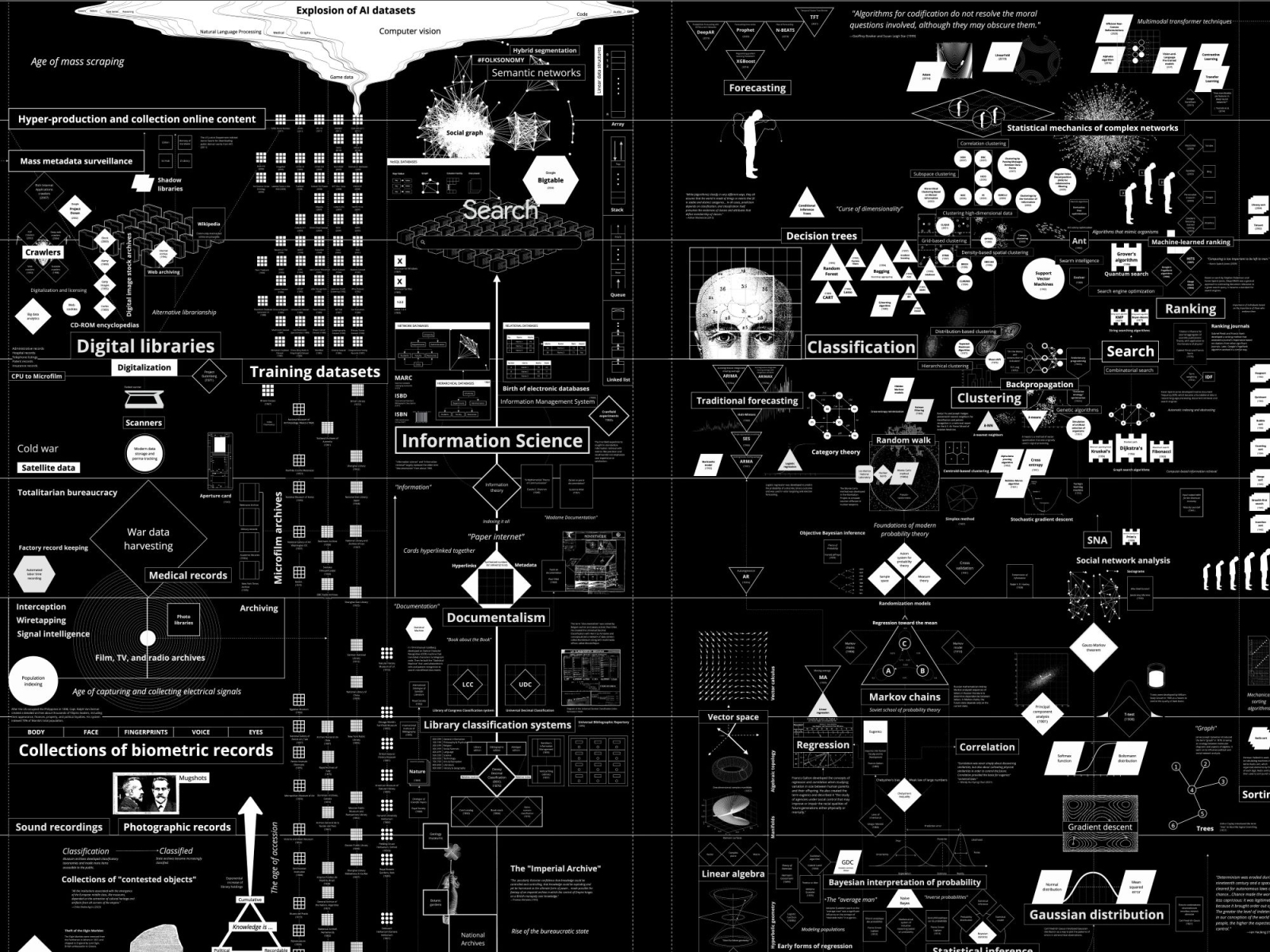

The project is called Calculating Empires: A Genealogy of Technology and Power, 1500–2025. The exhibition centers on a massive visualization, spanning five centuries, that I designed with my long-time collaborator Vladan Joler. As in a detailed circuit diagram, we mapped out the pathways of imperial power across many systems – colonialism, militarization, and automation. By seeing how past empires have made their calculations, we hope that gallery visitors will be better able to calculate the costs of our current technological empires.

DOWNLOAD AND READ ISSUE #48 OF RENEWABLE MATTER ON AI & TECH

This article is also available in Italian

The cover image is taken from the project Calculating Empires: A Genealogy of Technology and Power, 1500–2025 by Kate Crawford and Vladan Joler, on exhibit at the Fondazione Prada in Milan

© all rights reserved